Slow things suck! Nobody has ever said they are okay with slow. We are also a bunch of folks who are fanatical about speed, and slow things just put us off.

This update is the result of two years of effort by our engineering and product teams. Gumlet is now fast at almost everything you do: we made the website, dashboard, image processing, video processing and streaming, API, support, CI/CD pipeline, and cron jobs fast.

That's a lot of ground (almost everything in our tech), and to make sure users actually feel the speed, we had to overhaul almost every part of our tech stack.

Website

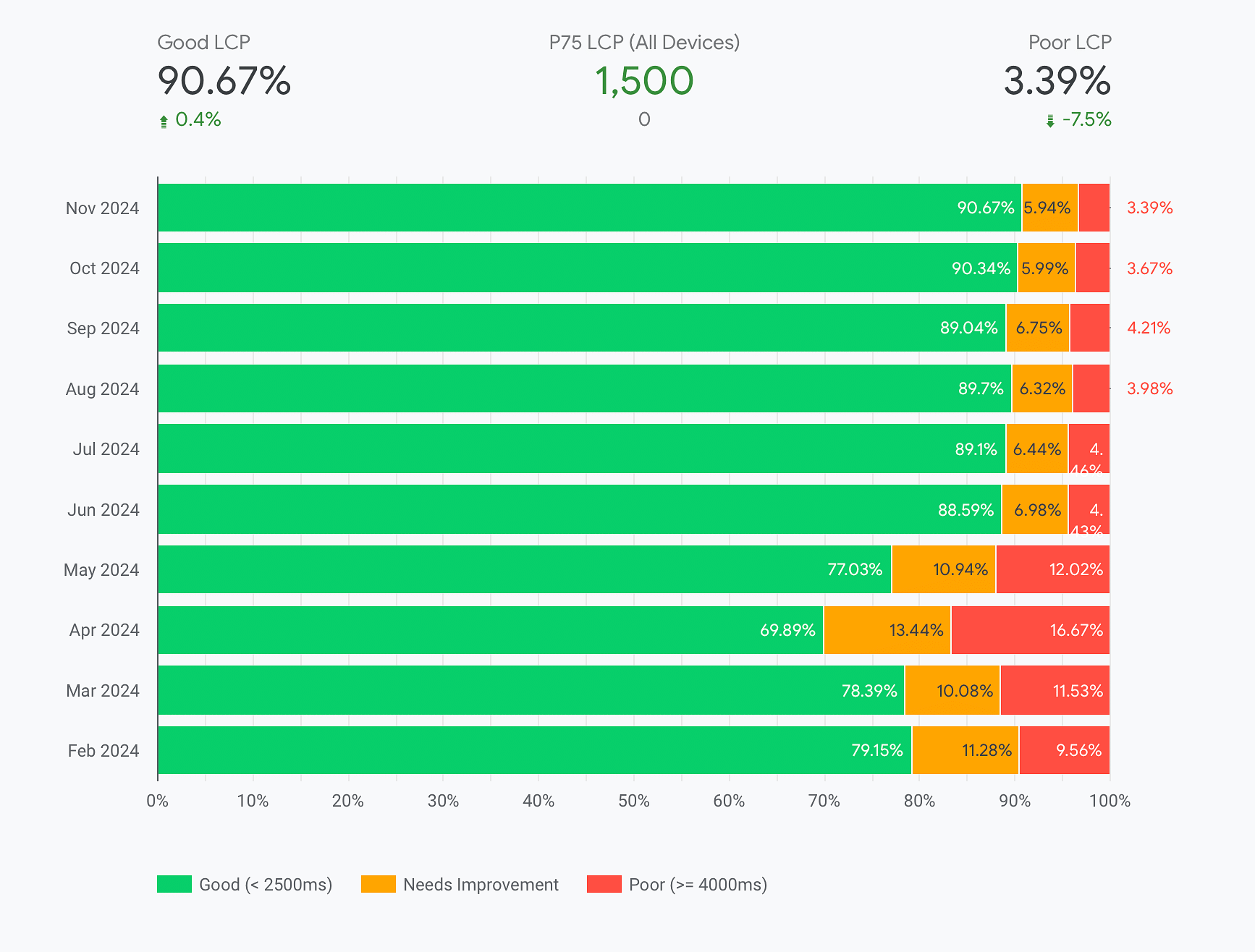

The website is where users and prospects first learn about our product, so it is the most crucial piece.

The old stack left us with a slow website, JavaScript that was extremely hard to maintain, and a lot of engineering time spent on every website update. It also made SEO optimization challenging, because we often saw very high LCP and FCP.

| Area | Old | New |
|---|---|---|
| Server | Express server | Next.js, Strapi CMS |
| DB | None | Postgres on RDS |
| CSS Framework | Bootstrap | Tailwind CSS |

Next.js makes the website extremely fast: pages are pre-rendered, and linked pages are prefetched. Tailwind keeps the CSS clean and compact, which helped solve the SEO issue. With Strapi, our marketing team can publish new pages in days instead of the weeks it took before, and edits take minutes. That also frees up the developer time that used to go into website updates. Did we mention it also became beautiful?

Head over to Gumlet.com to experience it yourself.

Dashboard

After signing up, you land on the Gumlet dashboard. Every interaction with the product happens here.

| Area | Old | New |
|---|---|---|
| Server | Express server | Amazon S3 |
| DB | MongoDB | MongoDB |
| Render | Server-side & jQuery | ReactJS |
| Backend | Express server rendering .pug templates | Node.js REST API |
| CSS | Bootstrap | Bootstrap (soon moving to Tailwind) |

Server-side rendering had a ton of issues. It wasn't interactive, it loaded slowly as users navigated between pages, and above all, the jQuery code tended to turn into spaghetti quickly. The new dashboard is super snappy: interactions happen in under 350 ms, and even the first load takes a fraction of a second.

One of the best things we achieved was separating the data layer from the presentation layer. Data comes from our REST API, which a backend team can own, while presentation is the sole responsibility of the frontend team. Win!

Image Processing

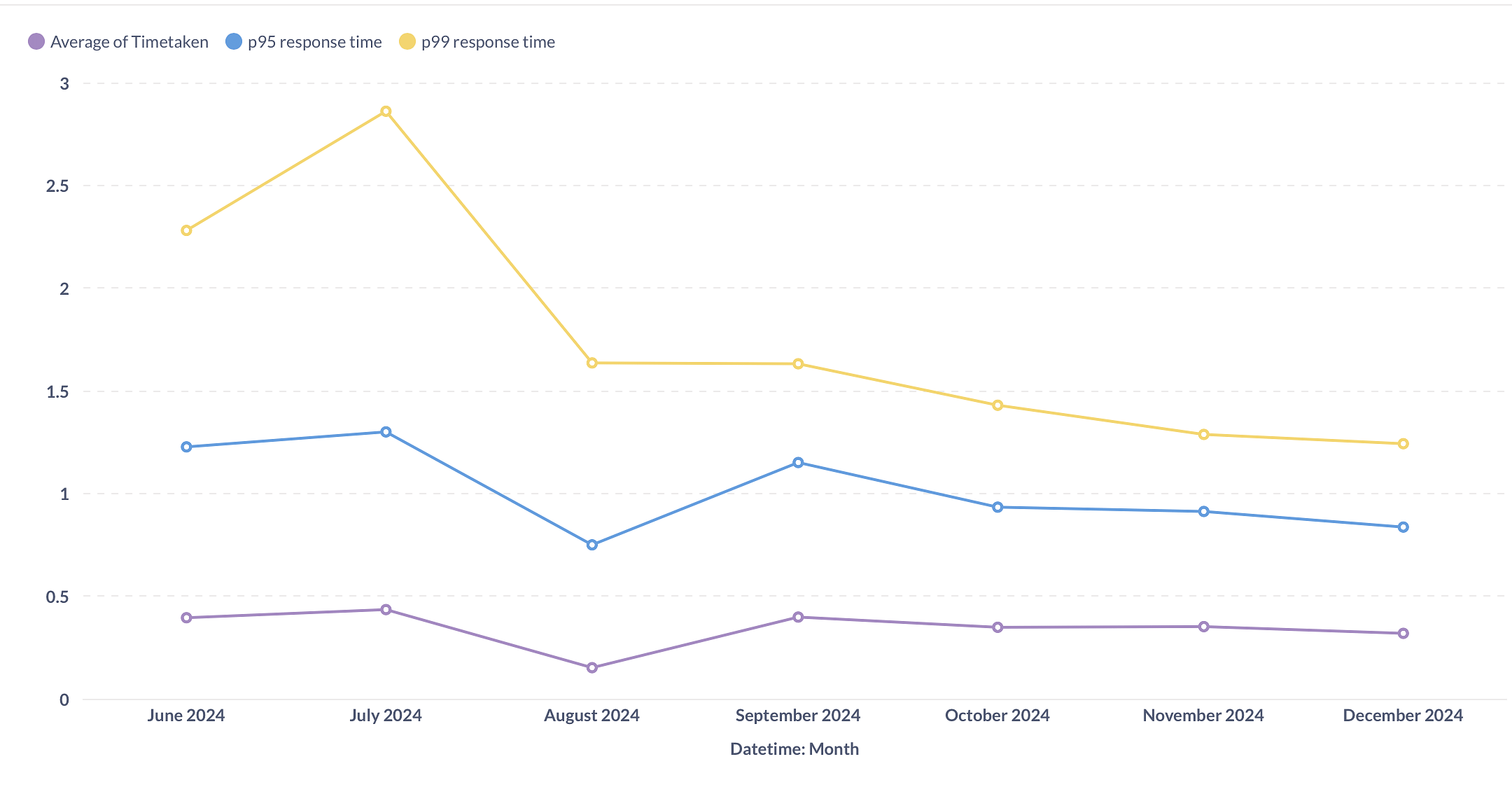

Image processing is one of our core products. We deliver more than 1.5 billion images every day, and every one of them is processed on the fly, so we cannot afford even a single second of downtime. Our customers use more than 40 different types of operations, and all of them need to be fast. On top of this, we were the first to introduce AVIF and JPEG XL as output formats; while these formats are very efficient, encoding images in them is computationally expensive.

Image processing speed directly affects both our customers and their end users. Our customers care about LCP (Largest Contentful Paint), and for most of them, image processing speed determines LCP. End users are not going to wait seconds for an image to appear.

We started optimizing the image processing pipeline by splitting it. One module caches processed images and handles more than 70% of requests; we split that module out and rewrote it in Golang (it was previously Node.js). That improved our average and tail latencies by 31%, from 0.51 to 0.35 seconds.
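
We don't go into the cache module's internals here, but the idea of putting a cache in front of the expensive processing path can be sketched as a cache-aside lookup. This is a minimal Python illustration only, not the Golang module itself: it assumes processed images are keyed by source URL plus transformation string, and the class name, the `process` callback, and the LRU eviction policy are all hypothetical choices.

```python
from collections import OrderedDict
from typing import Callable


class ProcessedImageCache:
    """Hypothetical cache-aside layer for processed images, with LRU eviction."""

    def __init__(self, capacity: int, process: Callable[[str, str], bytes]) -> None:
        self.capacity = capacity
        # Hypothetical slow path: (source_url, operations) -> encoded image bytes.
        self.process = process
        self._store: "OrderedDict[tuple[str, str], bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, source_url: str, operations: str) -> bytes:
        # Same source image + same transformation string -> same cached output.
        key = (source_url, operations)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        # Miss: run the full processing pipeline, then keep the result.
        self.misses += 1
        data = self.process(source_url, operations)
        self._store[key] = data
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return data

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

When more than 70% of requests take the hit branch, latency on that path dominates the average, which helps explain why rewriting the caching module paid off so broadly.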

Second, we improved our internal caching by rewriting the caching layer in C++ instead of Node.js, which cut cache store and fetch times by more than 50%.

Third, we improved our processing pipelines and hyper-optimized image encoding so that even the newer formats are as fast as older ones like JPEG. As a result, images are processed in the same time regardless of output format.

Last but not least, we upgraded our servers to a newer generation. Combined, these efforts brought the processing time for a fully uncached image down from around 0.8 seconds to less than 0.3 seconds!

Video Processing and Streaming

Videos are hard. Period. No matter what you do, video processing takes time and compute resources. We felt, however, that it should be as easy as image processing: quick and snappy.

| Area | Old | New |
|---|---|---|
| Processing | CPU | Latest-generation NVIDIA GPUs |
| Video Encoding | Pipeline-based; takes almost as long as the video's duration | Instant transcoding; full processing in 1/5 of the video's duration |
| Auto Captions | N/A | Generated at no extra cost, alongside processing |
| Streaming | Open source player | Custom player reduced load time by 82% |
| Storage | Custom storage server | Amazon S3 |
| DRM | Off-the-shelf DRM | Custom-built Golang DRM servers |

As you can see, we built a lot of custom components for performance reasons. The notable exception is storage: we found that storing videos in S3 is not only fast but also very reliable and cost-effective. All in all, processing time improved by 80%, and our video player now loads in 0.081 seconds, down from 0.4 seconds 😃

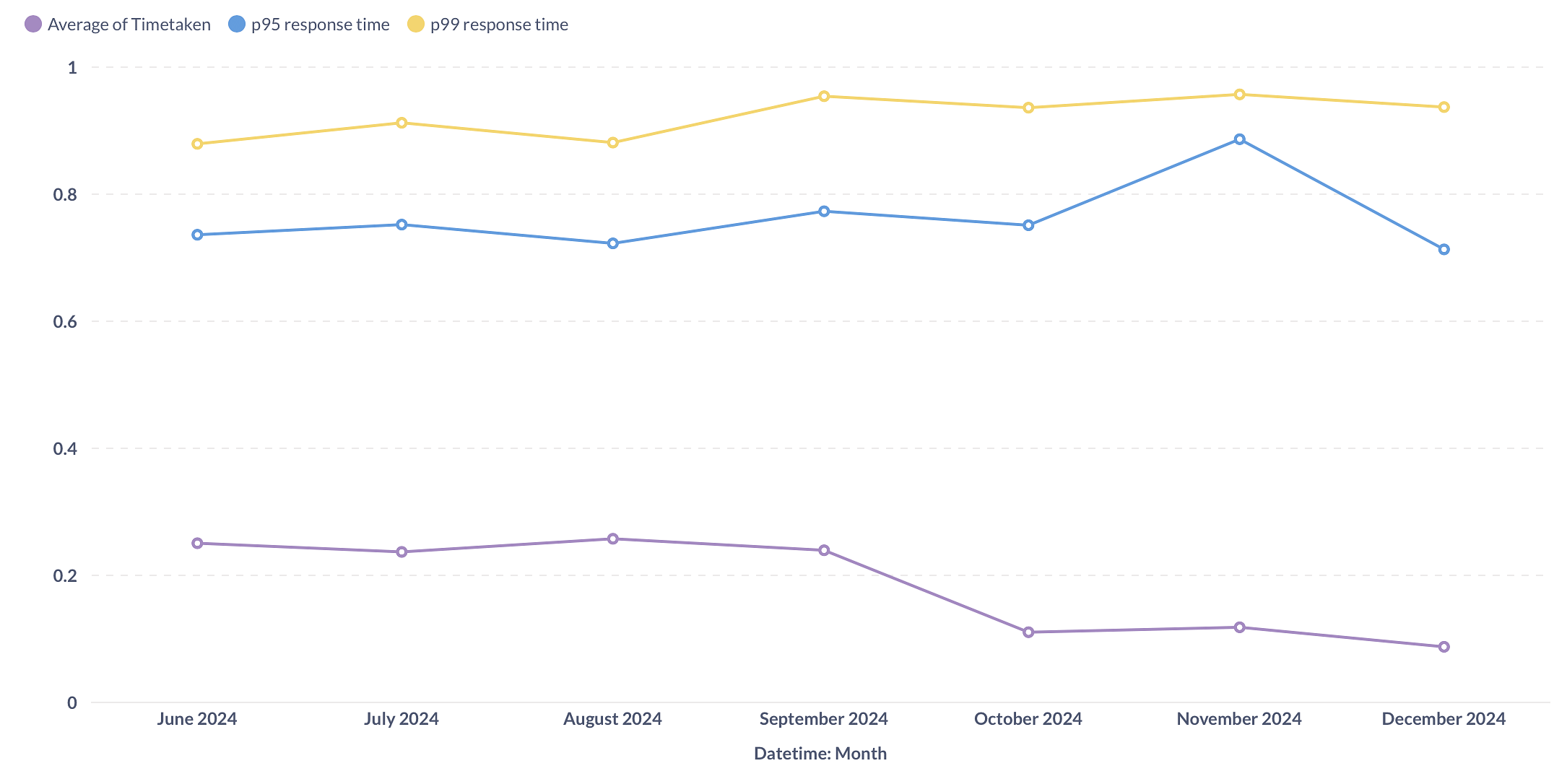

REST API

Our REST API is the backbone of the entire dashboard, and it also serves developers who process thousands of videos each day through it. The API has been written in Node.js from the start, and we are not changing that; instead, we optimized parts of it to make it faster. For analytics, we moved from BigQuery to ClickHouse, which performs much better and greatly simplifies our code.

We also started using MongoDB's Zstandard (zstd) wire compression, not only in the API but across all modules. Compared to Snappy, which we used before, it reduced both data transfer costs and tail latencies.
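
MongoDB wire compression is requested per connection through the standard `compressors` connection-string option; when several are listed, the connection uses the first compressor in the initiating client's list that the server also supports. A minimal sketch of building such a URI, assuming a hypothetical `mongo_uri` helper and placeholder host names; listing `snappy` after `zstd` keeps a fallback for any server that does not accept zstd.

```python
def mongo_uri(
    hosts: list[str],
    database: str = "",
    compressors: tuple[str, ...] = ("zstd", "snappy"),
) -> str:
    """Build a MongoDB connection string that requests wire compression.

    Hypothetical helper. The connection uses the first listed compressor the
    server also supports, so ("zstd", "snappy") prefers zstd, then snappy.
    """
    if not hosts:
        raise ValueError("at least one host is required")
    return f"mongodb://{','.join(hosts)}/{database}?compressors={','.join(compressors)}"
```

For example, `mongo_uri(["db1:27017", "db2:27017"], "app")` yields `mongodb://db1:27017,db2:27017/app?compressors=zstd,snappy`. With PyMongo, zstd compression also requires the `zstandard` package to be installed.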

We cut not just average response times but also p95 and p99 response times by 45-50%.
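
p95 and p99 are the response times that 95% and 99% of requests complete within, so they capture the slow tail that an average hides. A minimal sketch of the nearest-rank percentile and of the relative-reduction arithmetic behind figures like these; the function names are hypothetical, and this is not necessarily how our monitoring computes them (many systems interpolate between samples or use streaming estimates instead).

```python
def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    # ceil(p * n / 100), computed with integers to avoid floating-point rounding.
    rank = -(-p * len(ordered) // 100)
    return ordered[rank - 1]


def reduction_pct(old: float, new: float) -> float:
    """Relative reduction in percent, e.g. 0.51 s -> 0.35 s is about 31%."""
    return (old - new) / old * 100
```

Comparing `percentile(before, 99)` with `percentile(after, 99)` through `reduction_pct` gives the kind of tail-latency improvement quoted above.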

Support, CI/CD and Crons

We didn't make many internal changes here; we mostly switched to better third-party services.

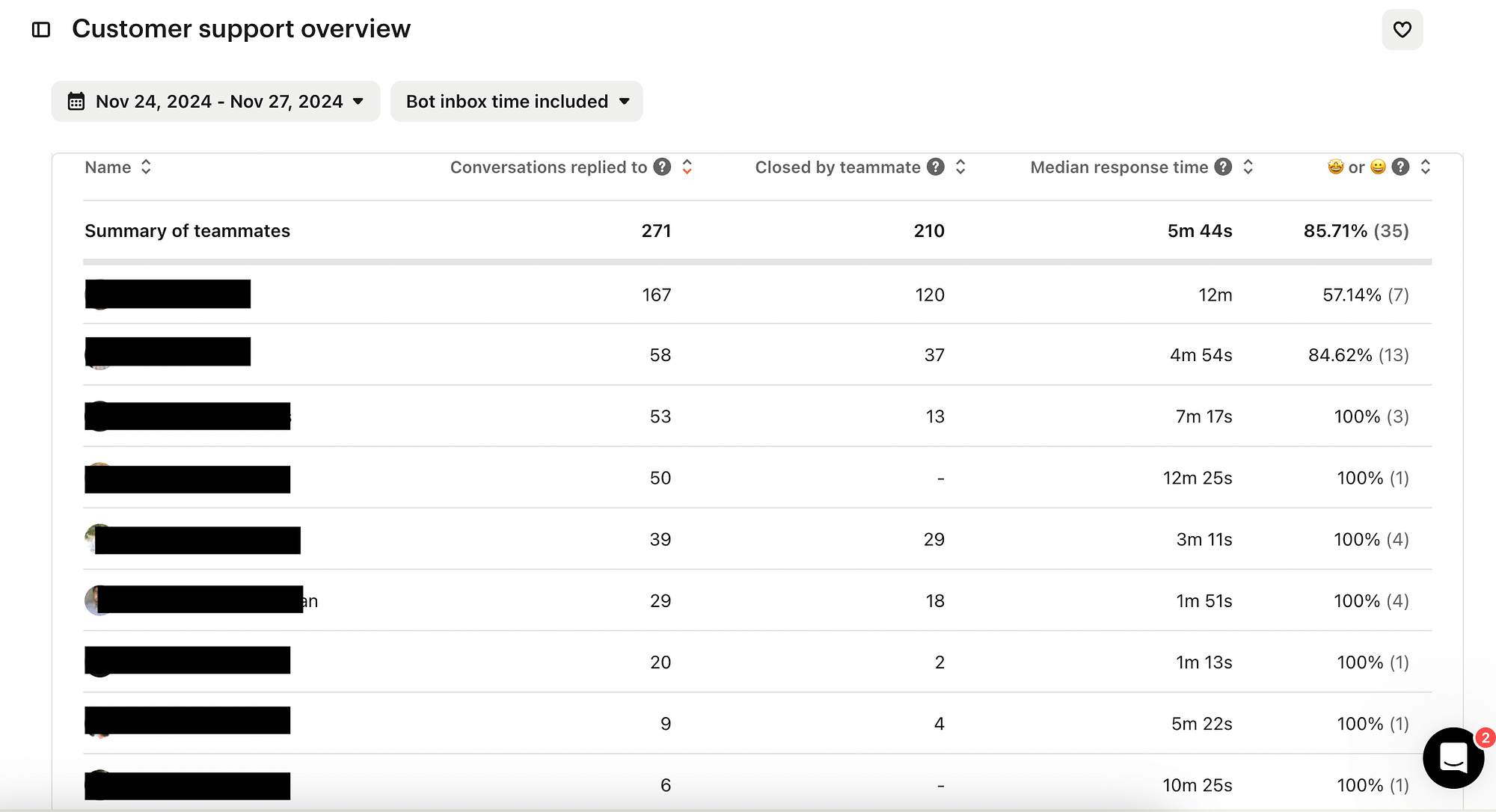

For support, we switched to Intercom. Its AI bot resolves a lot of customer queries automatically, and it gives our teams all the tools they need to resolve tickets quickly. It also gives us detailed stats on what needs improvement and how to improve it. The snapshot above shows our support performance during the Black Friday sale: a median response time of 5 minutes 44 seconds with an NPS of 85. Insane!

We were using Bitbucket for both our code repositories and CI/CD. Anyone who has used it knows the pain. In March 2024, we decided to move to GitHub. The entire migration took less than a month, and our builds became 2x faster. Not only that, we now have much better built-in tools, such as security scanning.

Last but not least, our crons were all over the place. First, we reduced the number of cron jobs by moving a lot of work to ClickHouse: materialized views and S3Cluster engines made our log processing cron-free.

Second, we consolidated all crons in a single Kubernetes cluster, which made it easy to monitor their status and runtimes. With that visibility, optimizing the few slow-running ones was quick.

Standing on the shoulders of giants

The task was monumental but extremely rewarding. Our engineering team put in a tremendous effort to make sure our mission of speed ⚡️ was achieved in 2024.

None of this, however, would have been possible without the technologies that exist today. MongoDB, ClickHouse, Kubernetes, Golang, ReactJS, and Node.js are the open source projects that made it possible.

Closing Thoughts

Gumlet became a lot faster in 2024, but our efforts don't stop here. Speed is a continuous process, and this year it became part of our engineering culture. Some areas, like the p99 times of our video player, did not improve in 2024. We will address many such issues in 2025 to give our customers the fastest, easiest-to-use image and video streaming product.

Goodbye for now!