Why own GPU

At Gumlet, we process images and videos and we process them a lot. Currently, we serve more than 2.5 Billion images and videos in a single day. While our images are still processing on CPUs, we decided to take a different path for videos.

Right from 2020, we started using GPUs for our video processing needs. We knew early on that GPU's FLOPs per dollar are much higher than CPUs and GPUs are getting better and better.

Our entire software stack is built on CUDA and takes advantage of GPU processing when transcoding videos. The cherry on top? we can run ML models on the same GPUs which are processing videos.

Initially, the GPUs were rented in the cloud and we ran pretty fine with them. 2024 is however different and our GPU demand increased 8x since January! We had to seriously think about how we are going to run this operation in a cost-efficient manner.

Moreover, Ahrefs and DHH inspired us about the cost-effectiveness of self-hosting servers and ever since I read the book The Google Story, I was excited to put together our own server.

What did we deploy

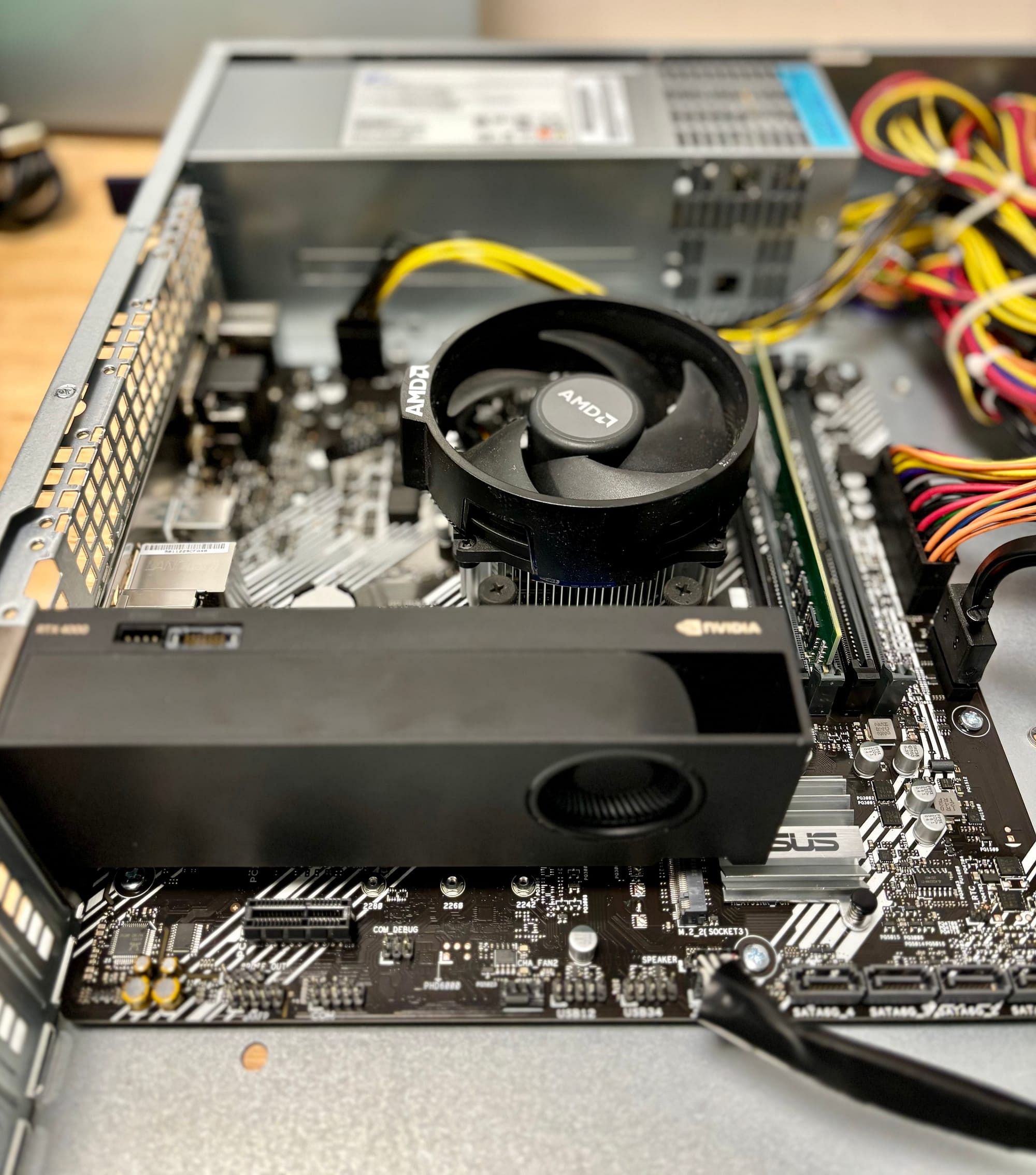

After evaluating options, we decided we were going to build our machines down to our exact specifications. Here is what we finalised:

- AMD 5700x processor

- ASUS B450MA motherboard

- 64 GB DDR4 RAM

- 512 GB NVMe SSD

- Nvidia RTX4000 ADA SFF GPU

- 2U rack mount with redundant power supply

You can check one of the servers in the cover image. Now, you might probably be thinking that these specs are not from a server lineup and you are correct. We took a little inspiration from the early days of Google and built our machines from commodity hardware. A single machine like this costs close to $2300 (a comparable machine g6.2xlarge costs $703 a month on AWS). Now, that's a bargain.

Nvidia RTX 4000 ADA SFF

The choice of graphics card was a big factor. We chose this card because it was the only SFF (small form factor) card on the market and it was the only one which would fit in the 2U rack. Another factor is that this card has enough GPU memory to do our video encoding tasks and also handle the ML workloads. We never went to data-center grade cards like L4 as they get much costlier and our FLOPs per dollar ratio will drop.

This was not without challenge though. We needed a relatively high quantity (20+) and these cards are not available in India. We had to go through our supplier and they helped us import these cards in a month.

What about hosting

Now this one is exciting. Generally, we would put our machines in a data center but due to the small quantity (one rack), no good data center was ready to help us quickly.

We however found that our co-working space - WeWork has an excellent server hosting solution. We could put the servers on the same floor as our office and they would provide redundant power supply, cooling and internet connection. This entire package is available at a much cheaper rate and we immediately jumped on this. Right now all servers are securely running in our office.

Monitoring and Deployments

This can be an uphill battle if you rely on the public cloud for these. Fortunately we always deploy on Kubernetes and use Grafana for monitoring. Both of these make sure we never have to worry about monitoring and deployments.

Closing thoughts

In the coming months, we will expand our racks and mostly move to a data center to host our servers. Meanwhile, ping us if you have some exciting self-hosting stories.